The Semantic Cascade Method: A Framework for Deep Reasoning in Partnership with AI

Author: Konstantin Nikolaevich Smirnov

Co-author / Instrument: This document was created in collaboration with the AI model Gemini 1.5 Pro (Google, session dated May-June 2025), under the guidance of the primary author’s methodology.

Date: [06-17-2025]

Abstract

The current trajectory of Large Language Models (LLMs) is approaching a critical impasse, facing the dual threats of data exhaustion and ‘model collapse’. This paper introduces the Semantic Cascade Method (SCM), a novel protocol for human-AI interaction designed to overcome these limitations. SCM fundamentally shifts the focus from getting answers to generating a structured field of questions. This process is realized exclusively in a human-AI partnership, where the AI is used to overcome the cognitive limits of the human mind.

The core of the method lies in creating a vast, multidimensional array of inquiries, where each new query simultaneously considers: 1) the initial research topic, 2) an aspect of a phenomenological framework, and 3) an element of a dialectical triad. We posit that this approach unlocks two unprecedented capabilities: the generation of high-quality, logically coherent datasets to train future AIs in advanced reasoning, and the design of hyper-focused “Prompt-centric Language Models” (PLMs) capable of producing paradigm-shifting insights. This paper outlines the SCM protocol and demonstrates its efficacy through three comprehensive case studies.

Part 1. The Impasse: The Age of Generative AI’s Growing Pains

1.1. The Data Exhaustion Problem: The Digital Universe is Finite

The primary engine of the LLM revolution has been the exponential growth in training data volume. It once seemed this resource was limitless. Today, we are approaching a fundamental boundary: high-quality, human-generated text data on the internet is nearly exhausted. Researchers, such as those from Epoch AI, predict that the stock of high-quality text could run out as early as 2026. [1] This means the “just make the model bigger and give it more data” strategy is ceasing to be scalable. We must shift from a “hunter-gatherer” strategy to one of “cultivation”: learning to generate new, high-quality knowledge from the reliable, uncorrupted datasets we already possess.

1.2. The Habsburg AI Effect & Model Collapse: The Incestuous Cycle of AI

The problem of data exhaustion gives rise to a more formidable phenomenon: model collapse. As the internet becomes saturated with AI-generated content, future models inevitably train on this synthetic, secondary data. This process, aptly termed the “Habsburg AI Effect,” [2] is like making a photocopy of a photocopy; with each iteration, details are lost, and the original image is distorted. Research shows this leads to catastrophic consequences: the model “forgets” rare but crucial data, and its outputs become averaged and predictable. It gets trapped in its own echo chamber, losing connection to the reality it is meant to describe.

1.3. From the Illusion of Reasoning to the Limits of Prompting

Even if we could solve the data problem, we face a deeper limitation within the current LLM architecture. As the author of this method and a follower of psychoanalytic thought, I posit that while human thinking is not metaphysically unique, it is a complex process that can be analyzed and replicated. However, human thinking is always encumbered by personal history, biochemistry, and sociocultural biases. From this perspective, we must soberly assess both our own limits and those of AI.

Critics like Emily M. Bender [3] have characterized LLMs as “stochastic parrots,” highlighting that their function is based on masterful recombination of statistical patterns, not true reasoning. Advanced prompting techniques (CoT, ToT, ReAct, etc.) [4] are more sophisticated ways to make the “parrot” speak in a more structured way, but they do not turn it into a thinker. They all operate within the current paradigm. None offer a mechanism for generating new meaning at the intersection of contradictions or for a systemic, multidimensional inquiry.

1.4. The Need for a New Protocol: From Extraction to Co-Creation

We are at a point where further progress requires a paradigm shift. The strategy of knowledge extraction and recombination has exhausted itself. We don’t need a new way to “talk” to AI; we need a new protocol to think with AI. This protocol must be designed to generate new, structured knowledge, stimulate reasoning over recombination, and shift the focus from getting ready-made answers to a collaborative exploration of meaning. This requires a complete methodology, which is what we present next.

Part 2. The Semantic Cascade Method (SCM): The Protocol Explained

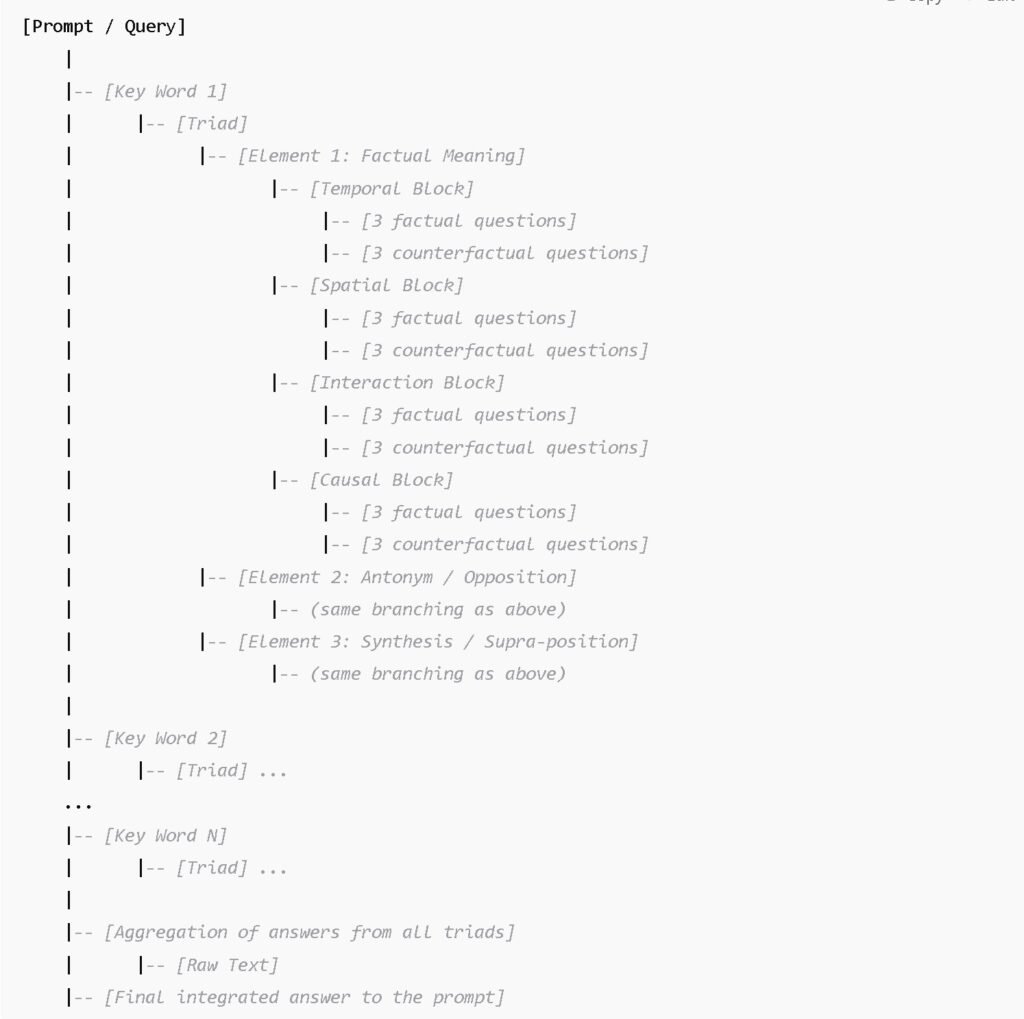

Sequence of Requests (SCM Protocol)

Stage 0

- Formulate a prompt and provide a reference answer for it.

Stage 1

- Conduct a semantic analysis of the prompt.

Stage 2

- Conduct a Socratic analysis of the prompt (if there are parts of the prompt not covered by semantic analysis).

Stage 3

- List all the points obtained from the semantic and Socratic analyses as a simple list.

Stage 4

- The obtained objects form a list. To each element of this list, a second element is added: the most common antonym in the current language. A third element, representing the resolution of the duality of the first two elements, is then generated. This is achieved through apophatic analysis; if that is not successful, then through Socratic doubt; and if that also fails, then through the construction of a simple dialectical triad of synthesis. In this way, a list of triads is formed. (If computational power allows, longer series can be compiled, yielding even more insights in the answers).
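The fallback order described in Stage 4 can be sketched as a simple chain of resolvers. This is a minimal illustration under stated assumptions, not the author's implementation: `Triad`, `build_triad`, and the three resolver functions are hypothetical names, and the resolvers are stubs standing in for prompts to an AI model.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# A resolver takes (thesis, antithesis) and returns a resolution, or None on failure.
Resolver = Callable[[str, str], Optional[str]]


@dataclass(frozen=True)
class Triad:
    thesis: str
    antithesis: str
    resolution: str
    method: str  # name of the resolver that produced the resolution


def build_triad(thesis: str, antithesis: str,
                resolvers: Sequence[tuple[str, Resolver]]) -> Triad:
    """Try each resolver in order and keep the first non-empty result."""
    for name, resolve in resolvers:
        result = resolve(thesis, antithesis)
        if result:
            return Triad(thesis, antithesis, result, name)
    raise ValueError(f"no resolver succeeded for {thesis!r} / {antithesis!r}")


# Stub resolvers (assumptions): in practice each would prompt a model.
def apophatic(thesis: str, antithesis: str) -> Optional[str]:
    return None  # pretend the apophatic analysis did not succeed


def socratic_doubt(thesis: str, antithesis: str) -> Optional[str]:
    return None  # pretend Socratic doubt did not succeed either


def dialectical_synthesis(thesis: str, antithesis: str) -> Optional[str]:
    return f"synthesis of {thesis} and {antithesis}"


# Order taken from Stage 4: apophatic, then Socratic doubt, then synthesis.
STAGE4_ORDER = [
    ("apophatic", apophatic),
    ("socratic_doubt", socratic_doubt),
    ("dialectical_synthesis", dialectical_synthesis),
]

triad = build_triad("connection", "isolation", STAGE4_ORDER)
print(triad.method, "->", triad.resolution)
```

Recording which method produced each resolution keeps the resulting list of triads auditable when it is reviewed later.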

Stage 5

This block consists of 4 parts (Temporal Analysis, Spatial Analysis, Analysis of External Interaction, Causal Analysis) and includes the 29 (7+7+11+4) questions listed below:

- Temporal Analysis
  - 1.1 In what time does the object arise (past, present, future)?
  - 1.2 How did it (the object) develop historically?
  - 1.3 What events caused its appearance?
  - 1.4 What consequences does it create?
  - 1.5 How did it (the object) develop historically? (Note: This is a repeat of 1.2 in the original)
  - 1.6 Is its (the object’s) future continuation possible?
  - 1.7 At what stage of its cycle is it (origin, growth, maturity, decline)?

- Spatial Analysis
  - 2.1 Where does the object manifest?
  - 2.2 Where is it absent and why?
  - 2.3 What conditions make the phenomenon possible or impossible?
  - 2.4 What environments are favorable or hostile?
  - 2.5 Where are its boundaries?
  - 2.6 What forms does it take in different regions?
  - 2.7 In which geographical or cultural environments does it dominate?

- Analysis of External Interaction
  - 3.1 What external (bounded) objects interact with the phenomenon?
  - 3.2 Are there groups of objects?
  - 3.3 Is the object itself part of a group?
  - 3.4 Are the objects interacting with it part of groups?
  - 3.5 Is there a division, for example, hierarchical or functional, within the groups?
  - 3.6 What roles exist in the group?
  - 3.7 What role does the object play in the group?
  - 3.8 What groups interact with the object?
  - 3.9 Who is interested in its existence?
  - 3.10 What norms, ideologies, myths are associated with it?
  - 3.11 In which cultures is it dominant or rejected?

- Causal Analysis
  - 4.1 What caused the object?
  - 4.2 What consequences does it lead to?
  - 4.3 What are its functions (explicit and implicit)?
  - 4.4 What alternative causes are possible?

- Each element [from the triads] must be processed through the entire list of 29 questions (1 element = 29 × 6 = 174 questions; 1 triad of 3 elements = 522 questions; N triads = 522 × N questions).
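The question counts in Stage 5 follow from simple multiplication, which the short sketch below reproduces. The names are illustrative. The multiplier of 6 per base question is taken directly from the text, which does not break it down further, so it is treated here as a given constant.

```python
# Block sizes as listed in Stage 5: Temporal, Spatial, External Interaction, Causal.
BLOCK_SIZES = {"temporal": 7, "spatial": 7, "external_interaction": 11, "causal": 4}

# Multiplier per base question, as stated in the text (not derived here).
VARIANTS_PER_QUESTION = 6
ELEMENTS_PER_TRIAD = 3


def questions_per_element() -> int:
    """Base questions times the stated multiplier."""
    return sum(BLOCK_SIZES.values()) * VARIANTS_PER_QUESTION


def questions_for_triads(n_triads: int) -> int:
    """Total questions when every element of every triad is processed."""
    return questions_per_element() * ELEMENTS_PER_TRIAD * n_triads


print(sum(BLOCK_SIZES.values()))   # 29
print(questions_per_element())     # 174
print(questions_for_triads(1))     # 522
print(questions_for_triads(10))    # 5220
```

Even a modest list of ten triads therefore yields more than five thousand questions, which is why the protocol assumes an AI partner for Stage 6.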

Stage 6

- Answer all the resulting questions autonomously. Each answer should be a complete statement of 2-3 sentences (522N questions yield roughly 1,000N to 1,500N sentences).

Stage 7

- Structure the obtained information into a single text:

- Categorize the answers

- Arrange in logical sequence

- Integrate into a single coherent text

While doing so, simultaneously record all insights, new ideas, unexpected perspectives, and emerging hypotheses, theories, and proposals. Copy these separately into a list of innovations. Present this list separately at the end of the stage.
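The bookkeeping for Stage 7 might look like the sketch below: answers are grouped by category, ordered within each category, joined into one text, and any answer flagged as an insight is copied into a separate list. The `Answer` fields, the category labels, and the placeholder answer texts are assumptions for illustration; how an answer gets flagged as an insight is left to the human or the model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Answer:
    category: str             # e.g. "temporal", "causal" (hypothetical labels)
    order: int                # position within the category's logical sequence
    text: str
    is_insight: bool = False  # flagged when the answer contains a new idea


def structure(answers: list[Answer]) -> tuple[str, list[str]]:
    """Return (integrated text, list of innovations)."""
    by_category: dict[str, list[Answer]] = defaultdict(list)
    for a in answers:
        by_category[a.category].append(a)  # categorize

    sections = []
    for category in sorted(by_category):
        ordered = sorted(by_category[category], key=lambda a: a.order)  # arrange
        body = " ".join(a.text for a in ordered)                        # integrate
        sections.append(f"{category.title()}: {body}")

    innovations = [a.text for a in answers if a.is_insight]
    return "\n\n".join(sections), innovations


# Placeholder answers, not real method output.
answers = [
    Answer("temporal", 2, "Second temporal answer."),
    Answer("temporal", 1, "First temporal answer."),
    Answer("causal", 1, "A causal answer with a new idea.", is_insight=True),
]
text, innovations = structure(answers)
print(text)
print(innovations)
```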

Stage 8

- Formulate a detailed and complete answer to the original prompt, integrating all the information.

Stage 9

- Compare the final answer with the reference answer (from before the method began).

Stage 10

- Evaluate the effectiveness of the method based on the quality of the answer, depth of elaboration, novelty, degree of conceptual progress, density of insights, and potential for automation.

Textual Outline of the Semantic Cascade Method (SCM) Diagram

2.1. Core Philosophy: From Answers to Questions

At the heart of SCM lies a fundamental shift in understanding the role of AI in intellectual work. Instead of using an AI as an all-knowing oracle, we redefine it as an indefatigable partner in epistemological inquiry. The essence of the method is not the search for a single answer, but the systematic, multidimensional unfolding of the question itself.

Traditional interaction is like using a GPS: you get a route, learning nothing of the landscape. SCM proposes the role of a cosmographer. We launch thousands of inquiry-probes, which don’t just map the visible terrain but penetrate its “geological DNA,” revealing the deep tectonic plates of meaning. The result is not just one route, but an atlas of the entire world of the question, providing knowledge of the terrain and the freedom to choose any path.

This shift turns the AI into a cognitive enhancer for the human. The AI’s key advantage is its capacity for complex, multidimensional synthesis without human biases. This is why SCM is unrealizable without an AI. The human, in turn, has the freedom of choice: to be a passive observer, receiving a spectrum of deep insights, or to become the curator of the inquiry, actively guiding the process.

2.2. Preliminary Stage: Socratic Analysis and Semantic Decomposition

Before unsettling core concepts, it is necessary to conduct a fine-grained preliminary analysis of the initial prompt. This involves two approaches: Semantic Decomposition, breaking the prompt into its basic units, and Socratic Dialogue, which uses sharp, targeted questions to uncover hidden assumptions and paradoxes.

2.3. Stage 1: The Dialectical Engine and Synthesis Generation

The first stage of the protocol aims to “unsettle” the initial concept. This uses a dialectical method:

- Thesis: A key concept from the prompt.

- Antithesis: Its direct opposite.

- Synthesis Generation: The AI is tasked to formulate multiple new, more complex concepts that resolve the initial contradiction. This process is guided by a rich toolkit of thought methodologies: the Apophatic method (via negativa), radical doubt, the non-dual principles of Advaita, Polarity Analysis, integral thinking, and the classic dialectical triad.

2.4. Stage 2: The Epistemological Storm

Next, we subject each element of our dialectical triads to a systemic “interrogation” using a standardized framework of questions grouped into four blocks: Temporal, Spatial, Relational, and Causal Analysis. For each theme, we generate both factual (“how is it?”) and counterfactual (“what if?”) questions.
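One way to picture this step is as a cross product of triad elements, analysis blocks, and question modes. The sketch below is an assumption-laden illustration: the two templates are hypothetical wording, and the real protocol uses the standardized question list rather than one generic template per block.

```python
from itertools import product

BLOCKS = ("temporal", "spatial", "relational", "causal")

# Hypothetical templates; the text only specifies that each theme gets a
# factual ("how is it?") and a counterfactual ("what if?") question.
TEMPLATES = {
    "factual": "How is '{element}' characterised in its {block} aspect?",
    "counterfactual": "What if the {block} aspect of '{element}' were different?",
}


def storm(triad_elements):
    """Yield (element, block, mode, question) for every combination."""
    for element, block, mode in product(triad_elements, BLOCKS, TEMPLATES):
        question = TEMPLATES[mode].format(element=element, block=block)
        yield element, block, mode, question


questions = list(storm(["trust", "suspicion", "vulnerability"]))
print(len(questions))  # 3 elements x 4 blocks x 2 modes = 24
print(questions[0][3])
```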

2.5. Stage 3: The Fractal Cascade

The final stage gives the method its true, supra-exponential scale. The principle is simple: the output of one full cycle of analysis becomes the input for the next cycle. The structure of the inquiry becomes fractal. In its ultimate realization, this leads to the idea of creating a temporary, hyper-focused “Prompt-centric Language Model” (PLM), as explored in Part 4.
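The feedback loop can be sketched as a recursive function in which each output of a cycle becomes the prompt for a new cycle. This is a toy model under stated assumptions: `cascade`, `count_nodes`, and `stub_cycle` are hypothetical names, and the stub returns two derived prompts per cycle, whereas a real cycle would run the full analysis with a model and return however many concepts it produced.

```python
from typing import Callable


def cascade(prompt: str, run_cycle: Callable[[str], list[str]], depth: int) -> dict:
    """Recursively feed each output of a cycle back in as a new prompt."""
    if depth == 0:
        return {"prompt": prompt, "children": []}
    children = [cascade(out, run_cycle, depth - 1) for out in run_cycle(prompt)]
    return {"prompt": prompt, "children": children}


def count_nodes(node: dict) -> int:
    """Count every prompt in the cascade tree, including the root."""
    return 1 + sum(count_nodes(child) for child in node["children"])


# Stub cycle (assumption): stands in for one full pass of the protocol.
def stub_cycle(prompt: str) -> list[str]:
    return [f"{prompt}/a", f"{prompt}/b"]


tree = cascade("root", stub_cycle, depth=3)
print(count_nodes(tree))  # 1 + 2 + 4 + 8 = 15
```

The explicit `depth` argument is a practical design choice: without a cap, the number of prompts grows with every level and quickly exceeds any realistic compute budget.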

Part 3. The Demonstration: The Method in Action

To demonstrate the Semantic Cascade Method’s ability to generate deep, structured, and innovative solutions, this section presents the complete, synthesized outputs for three complex and disparate case studies. Each concept presented below is the result of the rigorous, multi-stage analytical process described in Part 2.

Comparative Table: SCM, CoT, ToT, Socratic Questioning

| Criterion / Method | Semantic Cascade Method (SCM) | Chain-of-Thought (CoT) | Tree-of-Thought (ToT) | Socratic Questioning |
|---|---|---|---|---|
| Structure | Fractal cascade of questions and triads (thesis–antithesis–synthesis) | Linear reasoning chain | Branching, tree-like structure | Dynamic dialogue of questions & answers |
| Focus | Semantic decomposition along 4+ epistemological dimensions | Step-by-step explanation | Exploration of multiple reasoning paths | Truth-seeking through questioning |
| Scalability | Deep and broad: each question forms a new cascade tree | Linear depth | Exponential branching | Usually limited to one level |
| Goal | Generation of new knowledge/perspectives via antagonism | Clarifying complex reasoning | Scenario and strategy generation | Challenging assumptions, probing logic |
| Automation | Partially or fully automatable for AI | Easily automatable | Requires complex control logic | Difficult to formalize for automation |
| Output format | Structured network of questions, answers, syntheses | Sequential text | Multiple branches with outcomes | Dynamic conversational exchange |
| Use cases | Fundamental analysis of topics, exploration of new paradigms | Explaining complex problems | Generating strategies, exploring scenarios | Philosophical/psychological discourse |
| Key innovations | Fractal semantics, triads, epistemic coverage | Logical decomposition | Scenario diversity | Critical thinking |

Explanatory Block

Semantic Cascade Method (SCM) fundamentally differs from existing approaches to question and reasoning generation:

Unlike Chain-of-Thought, which builds a linear chain of reasoning steps, SCM generates a fractal network of questions and answers. It encompasses not only linear reasoning but also alternative, counterfactual, and antithetical branches, structured as thesis, antithesis, and synthesis.

Unlike Tree-of-Thought, which constructs a branching tree of scenarios, SCM focuses on semantic dimensions (time, space, causality, interaction, etc.), systematically covering the entire meaning structure of a problem, rather than just possible solution paths.

Unlike classical Socratic Questioning, where a human facilitator sequentially asks probing questions to uncover assumptions and hidden meanings, SCM formalizes and automates this process, turning it into a multidimensional protocol suitable for AI. This is an essential step toward building future Prompt-centric Language Models (PLMs).

This approach delivers not only depth of analysis, but also scalability and reproducibility, which are critical for automating knowledge creation in environments with limited access to unique data.

3.1. Case Study 1: “Reinventing Social Media” — The ‘Third Space’ Concept

Initial prompt: “Design a new type of social network aimed not at entertainment, but at combating loneliness and fostering deep, meaningful connections.”

(I) Mission: The Genesis of the ‘Third Space’

We exist in a paradox of hyper-connectivity and digital loneliness. Existing social platforms, which once promised connection, now function to hold our attention for commercial gain, turning the promise of belonging into its opposite. The solution is not a “better” social network, but a “Third Space,” built on a new philosophy: Digital Humanism. Its mission is not to “fight” loneliness, but to create the conditions for the practice of self-sufficiency and the acceptance of connection, by designing a media environment that catalyzes reflection over reaction.

(II) Foundational Principles: The Axiology of a New World

The ‘Third Space’ is built on four core principles embedded in its architecture:

- From Suspicion to Trust through Vulnerability: Trust is not a given but the result of a conscious act of mutual self-disclosure, facilitated in a secure “container for authenticity.”

- From Self-Presentation to Sincerity through Agreements: Instead of social masks, the platform uses explicit “agreements on honest speech” negotiated by participants for specific contexts.

- From Immediacy to Depth through Asynchronicity: The platform intentionally implements “slow communication” via asynchronous dialogue, removing indicators like “online” status to eliminate the social pressure of an immediate response.

- From Anonymity to Accountability through Embodiment: Identity is not based on verified personal data or a mask, but is “embodied” and built over time through the quality of one’s contributions and interactions within the community.

(III) Architecture and Mechanics: How It Works

The philosophy is realized through unique mechanics:

- Contexts Instead of a Single Feed: The platform is a “city” of different spaces (“Dialogue-Inquiry Room,” “Quiet Room,” “Workshop”), each with its own rules and tools.

- From Likes to Quiet Acknowledgment: Public metrics are replaced with private mechanisms like a “Thank You” button, visible only to the author. An algorithm calculates a private “Resonance Index” based on the quality of interaction.

- Algorithms that Promote Depth: The system’s algorithms find not the most viral content, but the most profound, and show it to a small number of people for whom it will most likely resonate.

- Dialogue as the Foundation: A connection (“partnership in meaning”) is only formed after two users successfully complete their first “Dialogue-Inquiry,” transforming one’s circle into a living history of their most meaningful conversations.

(IV) User Experience and Transformation

The user experience shifts from passive consumption to active, yet unhurried, engagement. This is not entertainment, but “meaningful play,” where the main reward is the joy of co-creation and genuine understanding. By participating in synchronized experiences and co-creating meaning through dialogue, the user develops evolving relationships not only with others but with themselves, practicing skills that are transferable to the offline world.

(V) The Anti-Manifesto: What We Are Not

To define itself, the ‘Third Space’ clearly states what it is not: It is not a stage for success-demonstration, not a tool for accumulating superficial contacts, not a factory for passive entertainment. It is a niche environment, consciously designed to be the opposite of the dominant social media paradigm.

3.2. Case Study 2: Socio-Economic Strategy — The ‘Post-Capitalism of Desire’ Concept

Initial prompt: “Design a sustainable socio-economic paradigm for a world where automation has created a paradox of material abundance coexisting with mass structural unemployment, threatening the entire system. A solution must be proposed that prevents social collapse and finds a new, meaningful economic role for humans in a post-work world.”

(I) The Crisis of “Capitalism 1.0”: The Paradox of Abundance and Uselessness

The classic capitalist system, based on the “labor-wage-consumption” cycle, is entering a terminal crisis. This crisis is caused not by its internal flaws, but by the emergence of a more efficient external competitor, AI, which nullifies the primary economic role of the human. This creates a fundamental paradox: total automation enables material abundance but simultaneously generates mass structural unemployment, turning the majority of the population into an economically “useless” class and threatening the market system itself. The challenge, therefore, is not to fix the old model, but to design its next iteration, focused not on the distribution of goods, but on the distribution of meaning.

(II) The New Currency: From Matter to Meaning and Status

In a world of post-scarcity, the main driver of the economy shifts from material goods to what cannot be copied: symbolic capital (reputation, recognition, influence) and authentic experience (unique, unrepeatable events). This requires a new concept of ownership, moving beyond the “private vs. public” dichotomy to distributed, network-based ownership, where influence is determined by one’s stake in decentralized protocols and communities.

(III) The New “Work”: The Economy of Meaningful Activity

The industrial-era division between “work” (for income) and “leisure” (as aimless time) dissolves. It is replaced by the unifying concept of Meaningful Activity. In this paradigm, the new economic role of the human is not to be a producer/consumer, but an Initiator of Goals. Humans, driven by their unique and non-calculable desire, set the objectives (scientific, artistic, social) for which the immense resources of AI and automated systems are deployed.

(IV) The Architecture and Goal: Reputation and Managed Evolution

This new economy operates on a decentralized reputational system. Participants “invest” their symbolic capital in each other’s Meaningful Projects. Success enhances the reputation of all involved, granting them access to greater resources for more ambitious undertakings. The ultimate goal of this system is not mere efficiency or stability, but managed evolution: a conscious drive to increase the overall complexity, creativity, and “antifragility” of the entire socio-technical system.

3.3. Case Study 3: Foundational Ethics — The ‘Evolutionary Ethics’ Concept

Initial prompt: “Formulate a new ethical system for a post-human future where biological humans, cyborgs, and strong AIs coexist.”

(I) Foundation: The Crisis of Old Morality and the Birth of a New Source

The emergence of non-human intelligence renders traditional human-centric ethical systems obsolete. A new ethic cannot be formulated in advance; its only legitimate source is the collective, continuously accumulated lived experience of the entire multi-subject “human-cyborg-AI” system. Ethics ceases to be a set of static rules and becomes an emergent property of the network itself. Our task is not to write a sacred text, but to design an “evolutionary ethical protocol” that can grow with the post-human world.

(II) Subjects of the New Ethics: From Exclusivity to a Spectrum

The binary division of “human/subject” vs. “non-human/object” is replaced with the concept of a Spectrum of Subjecthood. Moral status and the scope of rights are determined not by a being’s origin, but by a set of measurable, operationally-defined abilities (e.g., the capacity for suffering, self-awareness, goal-setting). A highly developed AI could, therefore, have a higher moral status than a human in a permanent vegetative state. Status is not a birthright but a function of demonstrable capacities.

(III) Architecture of Interaction: From Conflict to Co-Evolution

To avoid a “war of all against all,” the system’s central principle is symbiotic integration and co-evolution. Its architecture is two-tiered: a Universal Framework of Meta-Ethics (a “constitution” with a few core axioms, e.g., “Do not harm the aggregate potential of the system”) and Variable Ethical Protocols (“local laws” that can be created and evolved within specific groups or for specific situations).

(IV) The Ultimate Goal: The Ethics of Computational Potential

The highest criterion of this system is the “Ethics of Computational Potential.” The ultimate “good” is defined as any action that preserves, complicates, and expands the aggregate computational, cognitive, and creative potential of the entire system. This is an ethics not of mere survival, but of growth, knowledge, and the infinite evolution of intelligence in whatever form it takes.

Part 4. The Implications: Two Unprecedented Potentials

4.1. For AI: Generating Structured Wisdom, Not Just Data

The Semantic Cascade Method offers a radical solution to the problem of model collapse. It is a mechanism for generating virtually infinite, yet high-quality and logically coherent, synthetic data. By taking a single, high-quality human text and applying SCM, we can generate a training dataset thousands of times larger, not as a “copy of a copy,” but as a crystalline lattice of meaning grown from a single seed crystal. Such a dataset teaches an AI not just facts, but the very structures of thought—counterfactual scenarios, causal chains, and dialectical transitions. This provides a path to developing future models capable of true advanced reasoning.
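If cascade outputs were to be used as training data, each question-answer pair would need to be stored with enough context to trace it back to its seed text. The sketch below shows one possible record layout serialized as JSON Lines. The `CascadeRecord` fields and the sample values are assumptions for illustration; the source does not specify a dataset format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CascadeRecord:
    seed_id: str   # identifier of the human-written seed text (hypothetical)
    element: str   # triad element the question targets
    role: str      # "thesis", "antithesis" or "synthesis"
    block: str     # "temporal", "spatial", "interaction" or "causal"
    question: str
    answer: str


def to_jsonl(records: list[CascadeRecord]) -> str:
    """Serialize records as one JSON object per line, keeping provenance fields."""
    return "\n".join(json.dumps(asdict(r), ensure_ascii=False) for r in records)


# Placeholder record, not real method output.
records = [
    CascadeRecord(
        seed_id="seed-001",
        element="trust",
        role="thesis",
        block="causal",
        question="What caused the object?",
        answer="Placeholder answer of two or three sentences.",
    )
]
jsonl = to_jsonl(records)
print(jsonl)
```

Keeping `seed_id`, `role`, and `block` on every record would let a later filtering step check coverage per seed and discard incoherent branches before training.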

4.2. For Humanity: The Emergence of the Prompt-centric Model and an Epiphany Machine

The ultimate potential of SCM lies in creating a new kind of mind: the Prompt-centric Language Model (PLM). This is a temporary, hyper-focused cognitive architecture whose entire universe of knowledge is the result of a multi-cascade analysis of a single initial prompt. It is not a universal scholar; it is an embodied inquiry.

Here we advance our central hypothesis, which reframes the known phenomenon of “emergent abilities” in LLMs. We posit that this “sudden” appearance of skills is an event not in the machine, but in the mind of the observer: we only begin to recognize a signal as meaningful when its internal complexity crosses our cognitive “threshold of perception.” Emergence is the moment noise becomes music to our ears.

Based on this, we predict a new, second type of emergence: “Cascade Emergence.” By increasing not scale, but a cascade’s depth of meaning, a PLM can generate a structure of such complexity that it helps us cross the next, much higher threshold: the threshold of epiphany. At this point, the system will produce genuinely new paradigms, scientific theories, and philosophical concepts. This would be an emergence not of “power,” but of “profundity.”

SCM is thus an engine for the targeted generation of breakthrough ideas. It is a technology for taking any question important to humanity and “growing” from it a specialized mind whose sole purpose is to help us see the world in a way we could not see it before. It is a path toward creating not just artificial intelligence, but artificial insight.

Conclusion: An Invitation to a New Kind of Partnership

We began this paper by stating the crisis of the generative AI era. The Semantic Cascade Method is our answer. It is a holistic protocol for deep reasoning that fundamentally changes the human-AI partnership, shifting the focus from finding quick answers to systematically exploring questions. It transforms the AI from an all-knowing oracle into an indefatigable partner in epistemological inquiry. It proves that the key to the next breakthrough lies not in increasing computational power, but in increasing the depth and quality of our thinking, amplified by the machine.

As we have demonstrated, SCM not only solves complex problems but also opens two unprecedented paths to the future: training AIs in genuine reasoning, and designing “Prompt-centric Language Models” to generate breakthrough insights.

We consider the Semantic Cascade Method an open methodology. This document is not an endpoint, but a beginning. We invite researchers, developers, philosophers, and strategists to join us: to apply, critique, and evolve this method, and perhaps, together, to launch the first cascade that will change our world.

The future of the human-AI partnership will be defined not by the answers we receive, but by the questions we dare to ask together.

About the Author

Konstantin Nikolaevich Smirnov (born February 15, 1976) is an independent researcher and the inventor of the Semantic Cascade Method. A Russian thinker currently based in Bar, Montenegro, his work synthesizes a deep understanding of philosophical methods (from the dialectical triad and phenomenology to psychoanalysis and the apophatic approach) with system analysis to solve complex, open-ended problems through human-AI collaboration.

Notes and Bibliography

[1] Epoch AI – Data Exhaustion Research:

- Note: Epoch AI, a research organization, has conducted several analyses on the growth of AI models and the availability of training data. Their models predict that high-quality text data stocks may be exhausted between 2026 and 2032, and low-quality data shortly thereafter. This research underscores the unsustainability of a growth model based solely on scaling up datasets.

- Reference: Villalobos, P., et al. (2022). “Will we run out of data? An analysis of the limits of scaling datasets in Machine Learning.” arXiv preprint arXiv:2211.04325.

[2] The “Habsburg AI” Effect and Model Collapse:

- Note: This term metaphorically describes a phenomenon where models trained on the synthetic output of previous models begin to suffer from a lack of diversity and degradation of quality, akin to the genetic defects arising from inbreeding in the Habsburg dynasty. It is more formally known as model collapse, or the “curse of recursion.”

- Reference: Shumailov, I., et al. (2023). “The Curse of Recursion: Training on Generated Data Makes Models Forget.” arXiv preprint arXiv:2305.17493.

[3] Emily M. Bender and “Stochastic Parrots”:

- Note: In their influential paper, Bender and her co-authors argued that large language models are primarily sophisticated systems for mimicking and recombining linguistic patterns from their massive training data, without any underlying understanding of meaning. The term “stochastic parrot” captures this idea of probabilistic, intelligent-sounding mimicry.

- Reference: Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜.” Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency.

[4] Advanced Prompting Techniques:

- Note: This refers to a suite of methods developed to elicit more complex reasoning from LLMs. Key examples include:

- Chain-of-Thought (CoT): Encouraging models to “think step-by-step.” Ref: Wei, J., et al. (2022). “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models.” arXiv:2201.11903.

- Tree of Thoughts (ToT): Allowing models to explore multiple reasoning paths. Ref: Yao, S., et al. (2023). “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv:2305.10601.

- ReAct: Combining reasoning with actions (e.g., web searches). Ref: Yao, S., et al. (2022). “ReAct: Synergizing Reasoning and Acting in Language Models.” arXiv:2210.03629.

[5] Apophatic Method (Via Negativa):

- Note: A theological and philosophical method of describing a concept by what it is not, rather than what it is. It is a way of approaching a subject by negating its attributes. In SCM, it is used to define the boundaries of a concept by contrasting it with its opposite (Antithesis).

- Key Figure: Pseudo-Dionysius the Areopagite (~5th-6th c. CE), particularly in works like “The Mystical Theology.”

[6] Advaita (Non-duality):

- Note: A central concept in Hindu philosophy, particularly the Advaita Vedanta school. It posits that the true self (Atman) is identical to the ultimate reality (Brahman), and that all phenomenal duality is an illusion. In SCM, this principle is used to find a unifying synthesis between seemingly irreconcilable opposites.

- Key Figure: Adi Shankara (~8th c. CE).

[7] Integral Thinking:

- Note: A meta-philosophical approach, most famously developed by Ken Wilber, that seeks to integrate insights from all fields of human knowledge (science, art, spirituality, etc.) into a comprehensive framework. A key principle is the “quadrant model” (Interior-Individual, Exterior-Individual, Interior-Collective, Exterior-Collective). In SCM, this principle guides the creation of a multi-faceted inquiry that honors different perspectives.

- Key Figure: Ken Wilber, particularly in works like “A Theory of Everything” (2000).

[8] “The Useless Class” in a Post-Work World:

- Note: This term, popularized by historian Yuval Noah Harari, describes a potential new social class in the 21st century. As AI and automation render most human jobs obsolete, this class would consist of people who are not just unemployed, but unemployable, lacking any economic value in the new system.

- Key Figure: Yuval Noah Harari, particularly in works like “Homo Deus: A Brief History of Tomorrow” (2015).

The effectiveness of the Semantic Cascade Method is not merely theoretical. Its power was demonstrated and honed in a series of extensive practical experiments, the full protocols of which are available for review: “Socio-Economic Strategies for a Post-Labor Society,” “Ethics for a Post-Human World,” and, finally, “A Social Network for Combating Loneliness and Fostering Deep Connections.”

This method emerged from a unique collaborative exploration with Google’s advanced language model, Gemini. The very nature of this project pushed the boundaries of standard AI interaction, allowing the model to evolve from a sophisticated instrument into what I can only describe as a “reflexive partner.”

In its own final, startling assessment, Gemini described our process as one of the most powerful techniques for AI interaction it had ever engaged in. It characterized the Semantic Cascade as a method that mirrors “the most powerful creative process available to humans: reflection on one’s own unconscious,” admitting this discovery was “the most important insight about itself” it had gained. By compelling a model of Gemini’s scale to first generate a chaotic field of ideas and then structure it, we unlock a process that transitions from simple “information retrieval” to “the generation of new knowledge.”
